Salt Lake City, Utah, January 30, 2025

AI is changing the world at lightning speed, but are we thinking enough about the risks? At Utah Tech Week, a powerhouse panel tackled this question head-on. Can we balance innovation with responsibility? Experts from government, business, and academia shared their insights on how AI can be developed ethically while still driving progress.

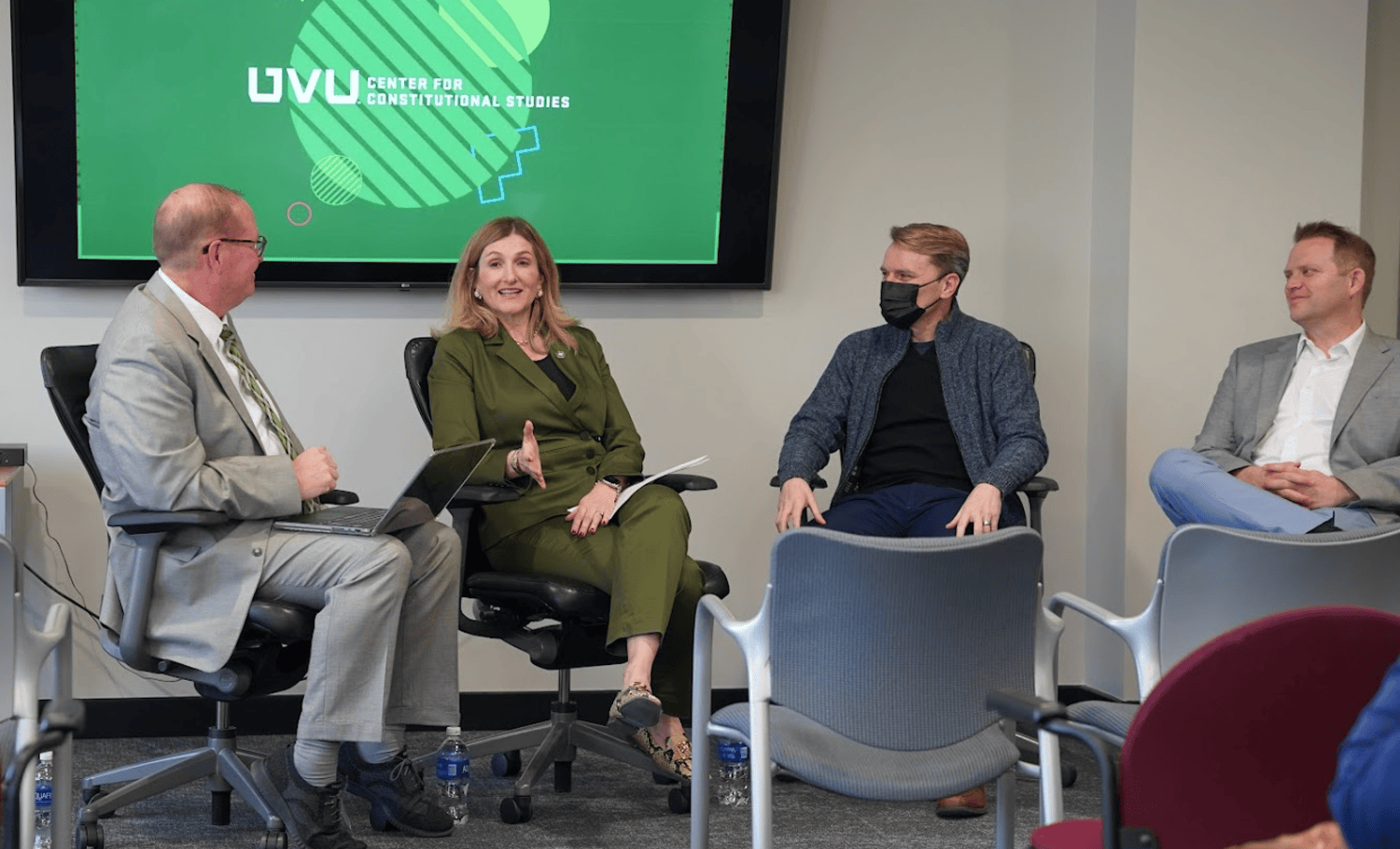

Hosted by the Utah System of Higher Education (USHE), the January 29, 2025 event featured three well-informed panelists: Margaret Busse, Executive Director of the Utah Department of Commerce; Nate Walker, Principal Investigator at the AI Ethics Lab at Rutgers University; and Dave Barney, CEO of ElizaChat, representing the tech sector. Together, they dove into AI’s ethical implications, its role in mental health, and the policies shaping its future.

It made sense for this panel to take place at the Utah System of Higher Education, as it oversees the state’s 16 public colleges and universities. The USHE Board, appointed by the governor, helps shape higher education policies and initiatives. USHE's goal is to strengthen education while preparing Utah’s workforce for the future—an especially relevant mission as AI disrupts and reshapes industries.

The Balancing Act: AI Innovation vs. Regulation

Utah has adopted a unique approach to AI regulation—fostering growth while prioritizing consumer protection. Margaret Busse outlined how the state is pioneering AI policy with a “light-touch” regulatory framework. This approach ensures AI companies can continue innovating while still maintaining ethical guardrails.

One of Utah’s key initiatives is the Office of AI Policy, which monitors AI trends, advises policymakers, and helps companies navigate compliance. Busse broke down the guiding principles behind Utah’s AI strategy: privacy, consent, accountability, and transparency. In short, Utah wants to foster AI innovation, but not at the expense of public trust.

“The default AI business model doesn’t have to be surveillance-driven,” said Busse. “We can choose to build something better—something that respects user privacy and puts the consumer first.”

AI, Ethics, and Consumer Protection

Dave Barney, CEO of ElizaChat, an AI-driven mental health platform, spoke about the need for responsibility in AI development.

“AI ethics isn’t a limitation—it’s the key to long-term success,” he emphasized.

Barney explained the concept of technical debt—the trade-offs developers make when building AI quickly, often at the cost of quality or ethics. While some compromises are necessary in tech startups, Barney argued that AI ethics should never be one of them. His company ensures that all mental health recommendations made by ElizaChat are reviewed by trained psychologists, a step many AI-driven platforms skip.

Nate Walker expanded on this by introducing the idea of self-reflective technology—AI that adapts to human values. He envisions a future where AI assistants help individuals uphold their personal and professional values, almost like a digital conscience. Imagine an AI that reminds you to proofread an email before sending it or filters out content that doesn’t align with your beliefs.

“We need AI that enhances, not replaces, human judgment,” Walker said. “It should be a tool that helps people live according to their values, not dictate their decisions.”

AI’s Role in Supporting Vulnerable Populations

One of the most promising—and challenging—aspects of AI is its ability to serve vulnerable populations. Whether it’s providing mental health support, breaking down language barriers, or offering educational resources, AI has the potential to bridge gaps in access and equity.

ElizaChat, for example, offers 24/7 support to users struggling with mental health challenges, something that traditional therapy can’t always provide. Research shows that mental health crises peak at night, when human professionals aren’t always available.

But with great potential comes great responsibility. Walker, who has worked extensively in AI ethics, stressed the importance of building AI that doesn’t reinforce biases or exclude marginalized communities. He pointed out that AI training data often skews toward wealthier, English-speaking populations, which can result in biased recommendations and a lack of cultural awareness.

“We need AI that’s designed with a global perspective,” Walker said. “If we don’t consider diverse viewpoints and ethical frameworks, we risk building AI that serves only a select few.”

Legal Guardrails for AI Development

As AI evolves, so do the policies surrounding it. Busse discussed how Utah is leading the way in balancing innovation with ethical responsibility. The Office of AI Policy is already working on initiatives to address data privacy, generative AI in mental health, and consumer protections.

One of Utah’s innovative strategies is its regulatory mitigation program, which allows AI companies to receive regulatory exemptions in exchange for adopting responsible AI practices. ElizaChat was the first company to take advantage of this initiative, demonstrating that startups can prioritize ethics without stifling innovation.

Walker, who consults with OpenAI on AI ethics, emphasized that regulations should focus on actual harms rather than hypothetical dangers. He noted that AI is already being used in criminal sentencing, hiring processes, and healthcare decisions—all areas where bias can have real-world consequences.

“We’re not talking about some distant sci-fi scenario,” said Walker. “These issues are happening now, and we need thoughtful, proactive regulation to address them.”

As the discussion wrapped up, Busse left the audience with a powerful message: AI responsibility isn’t just about avoiding harm—it’s about building something better.

“AI should reflect American values: innovation, fairness, and responsibility,” she said. “If we get this right, we can create technology that not only drives economic growth but also improves lives.”

The panelists agreed that the key to responsible AI isn’t just regulation—it’s public engagement, transparency, and a commitment to ethical innovation. As AI continues to evolve, the Utah tech community has an opportunity to set the standard for responsible AI development.

For entrepreneurs, policymakers, and tech leaders, the message was clear: AI’s future is in our hands. It’s up to us to build it responsibly.

- To learn more about the Office of Artificial Intelligence Policy & Learning Laboratory or to apply for regulatory mitigation, visit ai.utah.gov.

- To learn more about UVU’s AI programs, click here. To apply to UVU’s Applied AI Apprenticeship Program, contact Rachael Hutchings at 801-863-4965 or rhutchings@uvu.edu.

- Learn more about Rutgers University's AI Ethics Lab by clicking here.

- For more information on ElizaChat, click here.