July 8, 2024, Salt Lake City

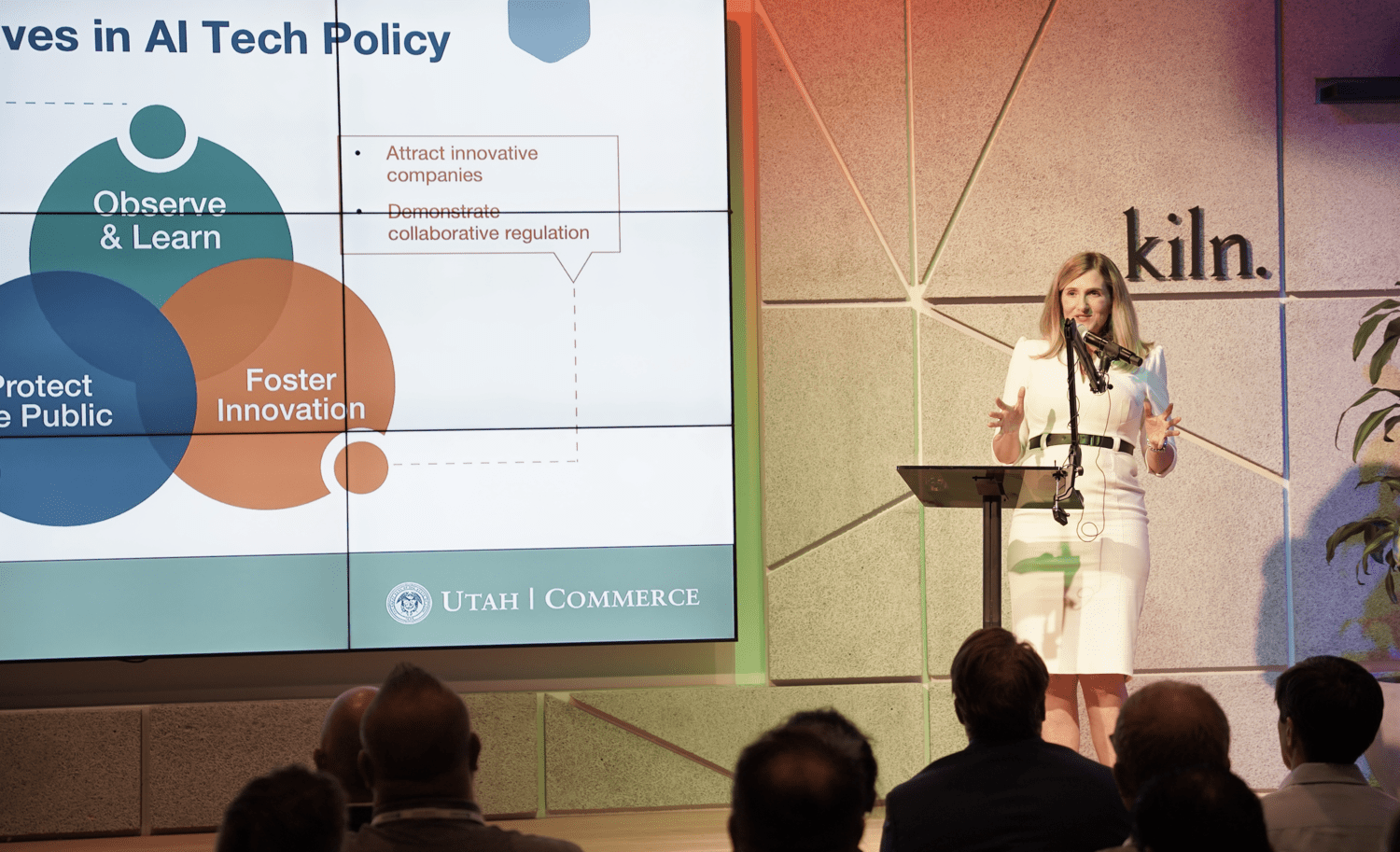

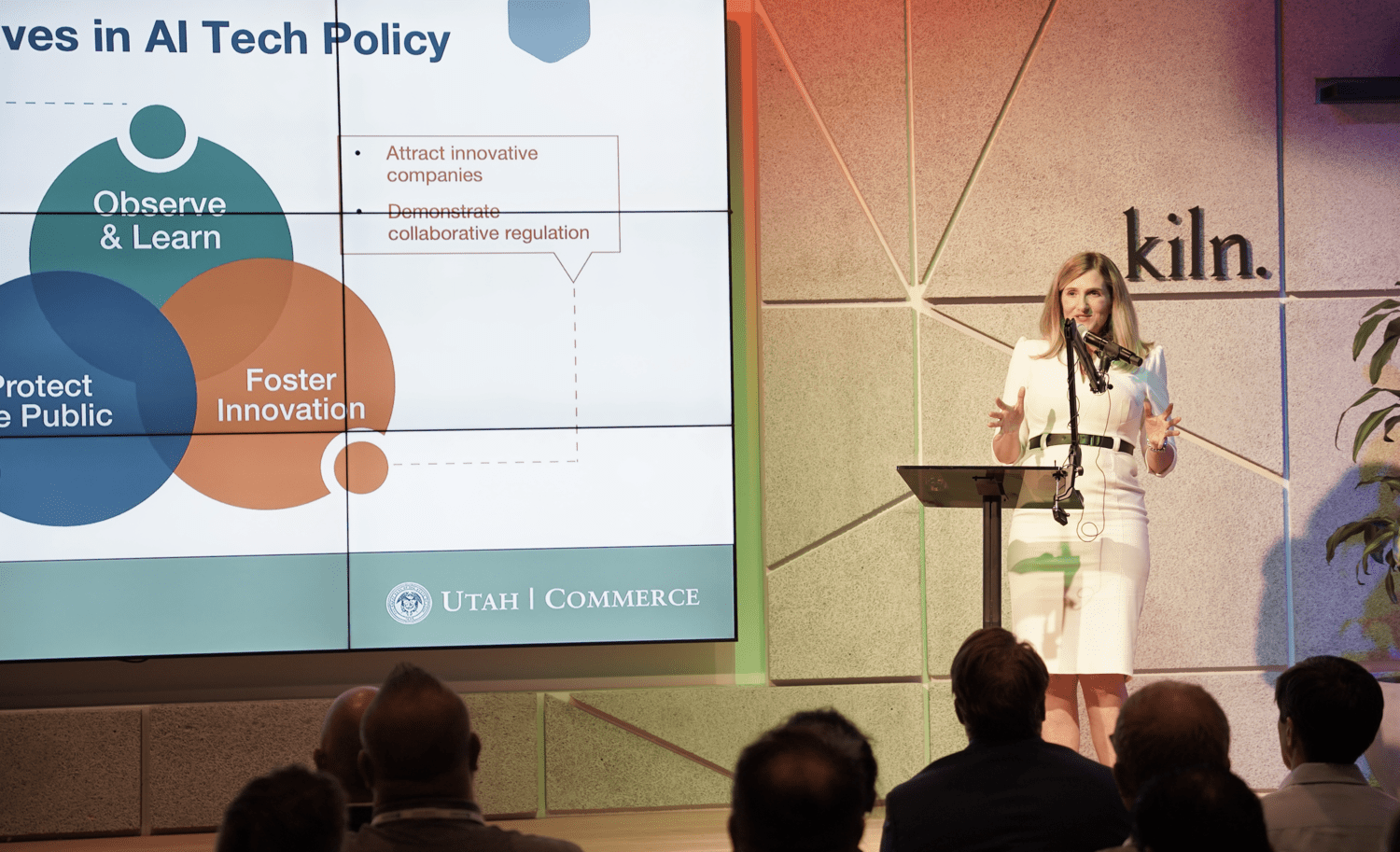

Today, Utah's Office of Artificial Intelligence Policy & Learning Laboratory (UOAI), an initiative of the state's Department of Commerce, launched its AI analysis and regulation activities. The announcement took place at Kiln Gateway in downtown Salt Lake City.

The Artificial Intelligence Policy & Learning Laboratory was created during the 2024 Legislative Session by Senate Bill 149, sponsored by Sen. Kirk Cullimore and Rep. Jefferson Moss. It is charged with taking a scientific and methodical approach to AI policy by gathering experimental data from companies and academics to determine which policies work before recommending them to the legislature.

In June 2023, Sen. Cullimore and Rep. Moss assembled an AI task force composed of legislative leadership, AI entrepreneurs, researchers in academia, and members of the state's executive branch, including Margaret Busse, Executive Director at the Utah Department of Commerce.

Rep. Moss said that when he travels around the country, other legislators and their staffs frequently ask him how Utah legislators accomplish seemingly impossible legislative feats such as crafting SB 149, which, among other things, unites industry and government behind seemingly contradictory goals: protecting consumers against AI threats while promoting innovation in the tech sector. "In our legislature we don't really have those issues," said Moss. "Believe it or not, we actually like each other and we get along really well. We don't sit across the aisle thinking how we can one-up each other. We literally sit together and try to come up with the right solutions."

"AI innovation is happening at a breakneck pace—faster than anything we've ever seen," said Margaret Busse. "It's very difficult for government to stay on top of it. We want to be able to stay on top of it as much as possible by proactively observing and learning."

The task force talked with AI experts from Utah and around the world, distilled their findings, and formulated three primary goals:

- Foster Innovation

- Protect Citizens

- Observe and Learn

"We need technology, but we need it to work for us," said Busse "We are putting appropriate guardrails in place to enable us to trust technology."

The two main functions of this new office are to offer regulatory relief where needed and, through study and collaboration with industry, to make recommendations to the state legislature using a learning lab approach.

The Lab's activities are designed to build trust among AI stakeholders, including the tech sector, state government, and universities, through data-driven policy, agile regulatory adaptation, and regulatory relief.

The goal is to work closely with AI companies to understand how their technology can affect the community and society at large while putting in place appropriate regulation where needed. Protecting consumers is one of the Department of Commerce's key missions; protecting the public from AI-enabled deception, fraud and other threats is a part of that mission.

The Lab's purpose is to understand the ever-evolving and complex AI landscape and use that understanding to inform policies that could be effective in mitigating threats caused by AI.

Governor Spencer Cox discussed the emergence of new technologies, their adoption over time, and how humans tend to take a long time to adapt to them. Citing the 2016 Thomas Friedman book, "Thank You for Being Late: An Optimist's Guide to Thriving in the Age of Accelerations," Governor Cox explained that society's ability to "assimilate and understand the reaches of new technology still takes at least a decade." For example, the harms of social media are only now being recognized, well over a decade after the technology was introduced. One constraint Utah policymakers face, he said, is that the state legislature meets only once a year for 45 days, which makes it hard to formulate policies and regulations for a technology such as AI that is both complex and rapidly evolving, more so than anything society has seen before.

"Collectively, we've come up with a new model—and I think it's unique in governance anywhere in the country, and maybe anywhere in the world," said Governor Cox. "It's not government versus innovators; rather it's government working with innovators. We're giving AI innovators a space and confidence to come in and show they can do. We then work collaboratively on the very regulations that will protect the marketplace, protect our citizens from harms that can come from that AI, and give policy recommendations to the legislature in real time—and not just during a 45 day legislative session."

He continued, "The world will present you with a false choice: you either regulate these companies and protect everyone, or you innovate and just let people do their thing. We said no, there's got to be a better way. What if we worked collaboratively to build trust, to find the parameters of where we need protections, to make sure we're not over-regulating or under-regulating, but doing it so that everybody has a fair playing field and an opportunity to participate in this incredible innovation, AI, that can help us achieve our wildest dreams and maybe solve some of the greatest conundrums that have impacted humankind?"

Margaret Busse explained that the Lab will focus its efforts on several "learning agendas" involving "regulatory gray areas as they relate to generative AI technologies." Generative AI's impact on mental health services will be the first focus area.

The Department of Commerce manages the licensure process for mental health therapy. Busse pointed out certain "regulatory gray areas" that have emerged as a result of AI-enabled chatbots being involved with mental health services.

She also raised several questions regarding chatbots and licensure:

"What kinds of activities conducted by chatbots must be licensed?"

"What are the guardrails that need to be put in place with respect to potentially private and intimate data that's now being communicated with the bot?"

"How do we determine what is and what's not appropriate with respect to the types of activities that these bots are allowed to do?"

The first applicant to the regulatory mitigation program is ElizaChat, a Lehi-based startup working in exactly this area. It's an AI-enabled mental health provider for young people. Founded in 2023, ElizaChat recently received a $1.5 million seed investment from unnamed investors. Its co-founder and CEO, Dave Barney, was a featured speaker at the event.

"I can't think of a bigger problem to solve than meeting that supply-demand gap between the growing demand for mental health services which is outpacing the supply of mental health services," said Barney.

He continued, "The area of mental health services is ripe for technological disruption, which is what we're trying to do, but it is important to us that we do it right. As a father, I don't want some random AI doing psychotherapy on my children without making sure that it's done the right way. We were excited when we heard about the opportunity to work closely with government, not in a way where the more we tell them, the more we're going to be restricted. Rather, it is the opposite. The more we tell them, show them, and work with them, the better opportunity we have to shape policy and create an environment where we can apply AI to mental health safely."

The Lab invites companies to apply for regulatory mitigation if they are concerned their product or technology may trigger regulatory actions.

To learn more about the Office of Artificial Intelligence Policy & Learning Laboratory, to apply for regulatory mitigation, or to share positive or negative stories and examples of AI, visit ai.utah.gov.